From Parental Control to Connection: Helping Our Kids Become Confident, Critical Thinkers in the Age of AI

How Parents Can Set Boundaries That Protect Without Stifling Growth — A Balanced Approach

“Can I have one more minute on my tablet, please?” asked my daughter.

“No,” I replied immediately.

“But I am on my learning app!” she protested.

Sound familiar?

A lot of us parents experience this balancing act daily: when to say yes, when to say no, and how to decide what counts as “good” screen time. I can’t help but think of it as a tug of war between parental control and nurturing my child’s digital agency.

How do we protect our children while helping them grow into confident users of technology, not just consumers?

As parents, we all strive to keep our kids safe and also raise them to be thinkers: people who can question, reflect, and make good choices for themselves.

This week, I want to explore how to balance safety with connection, why empowering our kids with critical thinking matters, and how guided agency offers a potential path.

🤖 AI Concept of the Week: Adaptive Parental Controls

What it is: Traditional parental controls let parents set usage timers or block certain content on an app or device, instead of physically turning off or taking away the device. Adaptive parental controls take that ability to the next level. They adjust these settings based on a child’s age, maturity, and behavior patterns, creating personalized guidance and real-time feedback. So, no more manually changing every setting. In theory, parents can set boundaries that automatically evolve with the child, supporting gradual independence.

Why it matters for parents: These tools can be powerful, but they work best when paired with active parental involvement rather than left on autopilot. Limits and controls alone cannot teach critical thinking or self-regulation. They should be treated as a supporting tool, not a replacement for parenting and the connection and conversation that come with it.

💡 Reflective Bytes: Digital Dependency vs Digital Agency?

In our conversations, parental control often takes center stage. But what if the deeper goal isn’t just control, but cultivating true digital agency?

How do we shift from managing our children’s screen time to empowering them to understand when to stop or what’s worth their time?

Here’s what the research says:

Parental controls reduce exposure to online risks and ease parental stress [1], but overly strict, unilateral restrictions can harm trust and increase conflict [2].

Children respond BETTER to active mediation strategies that involve open conversations, shared decision-making, and co-use of media than to authoritarian monitoring [3][4][5].

Research suggests the healthiest digital habits develop when parents combine thoughtful boundaries with active engagement and respect for their child’s growing capabilities [6][7][8].

Questions to consider:

Are my current rules protecting my child while allowing them to learn and practice digital skills?

How can I involve my child in decisions about their online life to build trust and agency?

Am I using controls as a tool for safety and learning, or as a substitute for ongoing dialogue?

What does “guided agency” look like in our family, balancing boundaries with opportunities to grow and make choices?

There are no perfect answers…

While in most cases stronger parental control is needed, I would like to invite us to pause and rethink our approach. Let’s move beyond managing screen time and focus on mentoring our kids to be responsible digital citizens.

I am not promoting unsupervised screen time. Rather, I am advocating for sharing our values and teaching kids what safe, smart, and meaningful tech use looks like.

🙌 Your Turn: What’s one screen-time rule in your house that works or doesn’t? Comment or reply. I’d love to hear!

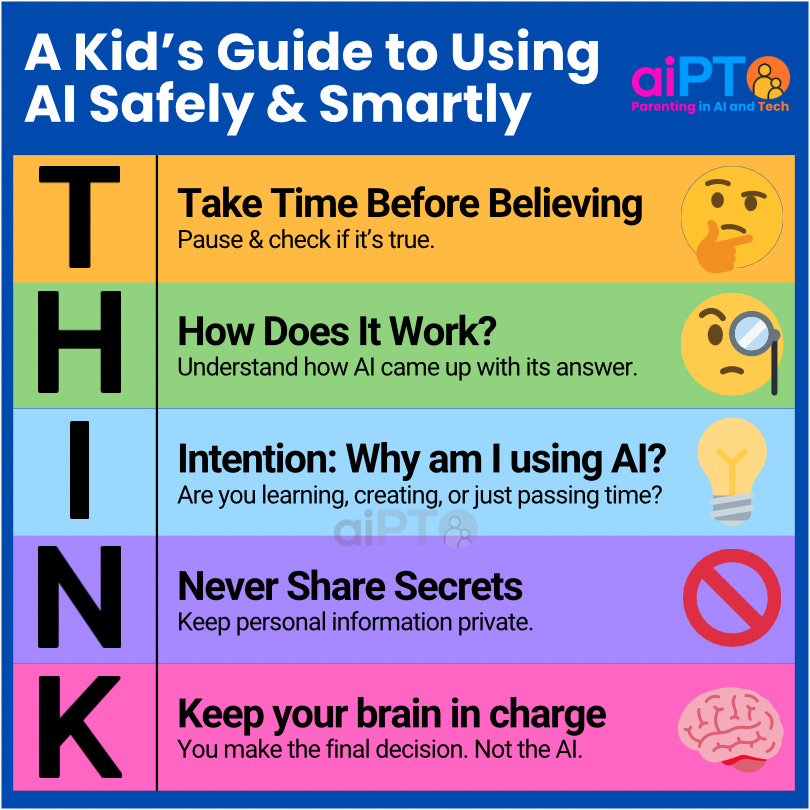

🤔 THINK framework spotlight: T for Take Time Before Believing

I previously shared THINK, a kid-friendly framework for thinking critically about AI outputs, here.

Building on our reflection about balancing parental control with kids’ growing need for agency, a key step we can take is teaching our kids to pause and critically evaluate information they encounter online, especially from AI sources. Encourage them to double-check the facts against other sources they have, e.g. books, trusted websites, or a knowledgeable adult. The goal is to help kids learn the skills to question, reflect, and decide for themselves.

At the time of writing (and possibly because of the unregulated nature of online information), genAI can be, and often is, unintentionally wrong. Teaching kids to “Take Time Before Believing” encourages metacognition (read: thinking about thinking) and self-regulation. In other words, it promotes critical thinking and protects them from misinformation.

🗂️ 5-Minute Conversation Starters

Age 5-8:

After an AI tool generates content, respond with “That’s cool! How do you know it’s true?”

Or ask, “Who do you think made that story or picture or information? Let’s find the sources.”

Age 9-12:

During homework using AI, ask them, “That’s interesting. Where did you find that information?”

After they respond, follow up with, “Who do you think created it? How else can we cross-check the information?”

Age 13+:

As they complete homework using an AI assistant, ask, “I am curious. What made you believe that is accurate?”

After hearing their opinion, follow up with, “Do you think it might be missing some information, or has it considered all possible answers?” or “How might AI influence what you believe is true?”

💪 Parent-Powered AI Digest

Real Laws. Real Risks. Real Conversations.

The Take It Down Act becomes law, protecting kids from AI-generated explicit images

Signed this week, the Take It Down Act is the first federal law making it a crime to share, or threaten to share, nonconsensual intimate images, including AI-generated deepfakes. Online platforms must now remove such images within 48 hours of a victim’s request, with enforcement by the FTC.

What it means for parents: This law gives families a new tool to fight image-based abuse and deepfake exploitation, but it also highlights the importance of teaching kids about privacy, consent, and the risks of sharing images online. Let’s use this moment to start a conversation about digital safety and why critical thinking and asking for help matter if something feels wrong.

Miami schools launch the largest AI chatbot rollout for students in the U.S.

Miami-Dade County Public Schools, America’s third-largest district, is now providing Google Gemini chatbots to over 105,000 high school students. After previously banning AI chatbots due to concerns about cheating and misinformation, the district is now training more than 1,000 teachers and encouraging students to use AI for research, writing, and critical analysis.

What it means for parents: This may indicate a shift in sentiment, from AI as a homework shortcut to AI as a classroom tool. As parents, we need to ask our schools to share their privacy policies and communicate regularly about how any AI tools are used. Consider using our THINK framework to help our children think critically about how they use AI in school.

AI Tools Flood Classrooms; Experts Emphasize Critical Thinking and Ethics

A new study from the University of South Florida highlights the rapid adoption of AI tools in Pre-K-12 classrooms, with over 1.25 million teachers now using platforms like TeacherServer for lesson planning and assessments. However, researchers and educators stress the importance of ethical integration and critical thinking, especially as many teachers remain cautious due to data privacy concerns and lack of formal training. The initiative is part of a broader movement to ensure that as AI becomes a classroom staple, students learn not just how to use these tools, but how to question, verify and use them responsibly.

What it means for parents: As AI tools become part of your child’s daily learning, it’s more important than ever to “Take Time Before Believing” the answers AI provides. Our THINK framework encourages kids to pause, ask questions, check sources, consider their privacy, and reflect on whether an AI tool is helping them learn or just making things easier.

🔑 Bottom Line

Parental control is still a key foundation of our children’s safety, but we must not forget that true empowerment comes from genuine connection and conversation.

AI, like any other technology, has pros and cons that we do not yet fully understand. I believe deeply in the importance of raising kids to be confident, capable, and curious digital citizens and, just as importantly, in empowering fellow parents with the tools to do that.

Let’s TALK. Let’s THINK. Let’s teach our kids to pause, question and choose safely and smartly. One prompt at a time.

Thanks again for being part of this journey through the AI era of parenting.

Dhani

[1] https://digitalwellnesslab.org/research-briefs/safety-and-surveillance-software-practices-as-a-parent-in-the-digital-world/

[2] https://eprints.lse.ac.uk/120219/3/Do_parental_control_tools_fulfil_family_expectations_for_child_protection_A_rapid_evidence_review_of_the_contexts_and_outcomes_of_use.pdf

[3] https://pmc.ncbi.nlm.nih.gov/articles/PMC8978487/

[4] https://pmc.ncbi.nlm.nih.gov/articles/PMC8536835/

[5] https://onlinelibrary.wiley.com/doi/full/10.1111/chso.12599

[6] https://onlinelibrary.wiley.com/doi/full/10.1111/chso.12599

[7] https://cyberpsychology.eu/article/view/14126

[8] https://cyberpsychology.eu/article/view/33751
